The field of artificial intelligence is rapidly evolving, bringing with it both exciting innovations and new challenges. As AI systems become more complex and more deeply integrated into corporate applications, effectively managing their security is more critical than ever. To help navigate this dynamic landscape, this blog post breaks down eight common security themes, providing a clear framework for building a more resilient and secure AI strategy.

Review of Published Frameworks and Guidelines

A number of frameworks for AI cybersecurity have emerged in the last couple of years. I have spent the last few months trying to grasp the essence of many of them, including but not limited to the following:

- Google Secure AI Framework (SAIF) – Describes 15 AI risks in detail and provides an excellent guide to developing secure and responsible AI applications. SAIF focuses on four components of AI development: Data, Infrastructure, Model, and Application.

- Databricks AI Security Framework (DASF) – Blog post about Databricks AI Security Framework listing 60+ controls.

- ISO/IEC 42001:2023 Information technology – Artificial Intelligence – Management System

- NIST AI Risk Management Framework (AI-RMF)

- IBM framework for securing AI applications – Built around the concept of an AI pipeline. It helps in understanding how attackers will target AI systems and applications at each stage of the pipeline, and how to defend each stage.

- MITRE ATLAS Matrix – Covers the tactics attackers use and how attacks progress. Understanding “the other side” is essential to protecting against attacks.

- OWASP GenAI Security Project – A broad set of guidelines for security of AI systems, adoption, governance, threat intelligence and incident response.

- OWASP Top 10 for LLM – List of the top ten vulnerabilities for Large Language Models (LLMs).

- CSA AI Controls Matrix – Focused on Cloud-based AI systems, vendor agnostic.

Exploring these frameworks makes it clear that each one looks at cybersecurity challenges through its own lens. Even so, eight common themes emerge from the review; they are described below.

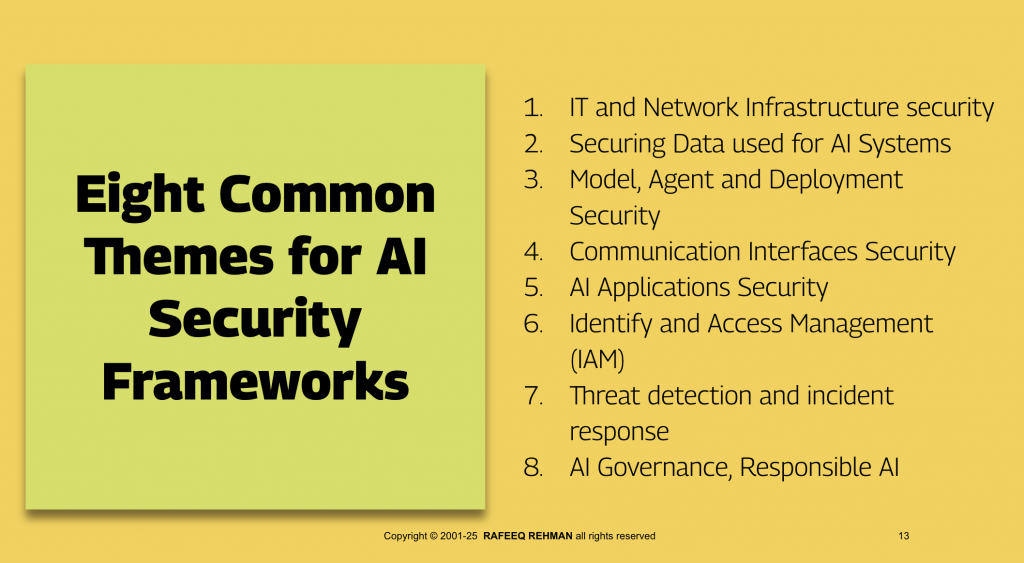

Common Themes for AI Security Frameworks

The following is a list of eight common themes for an AI security strategy. Organizations should develop their AI security policies, standards, guidelines, and controls around these themes for comprehensive, end-to-end protection of AI systems.

- IT and Network Infrastructure Security – These risks are associated with the network infrastructure on which GenAI models and applications run. They have always existed and are not specific to AI: this is traditional infrastructure security, which applies to AI systems as well. It includes asset management, vulnerability management, patching, network security, firewalls, cloud configuration management, and other traditional mechanisms.

- Securing Data used for AI Systems – Data fuels AI systems and is key to training AI models. Data security applies to collecting, cleaning, and organizing data for model training. Retrieval-Augmented Generation (RAG) uses a vector database to store embeddings, which has its own security implications. There are also heightened concerns about data privacy and ethical/responsible use, which require that the data be clean, unbiased, and not poisoned.

- Model, Agent and Deployment Security – Protecting the model itself: securing source code, preventing model theft, securing model deployment, and preventing denial-of-service attacks against the model.

- Communication Interfaces Security – New communication interfaces and protocols that are specific to AI are emerging. These include, but are not limited to, agent-to-agent communications and the interfaces to tools, plugins, and helpers, all of which need to be secured.

- AI Applications Security – End users typically interact with AI models through applications built on top of AI technologies. These applications must be protected against AI-specific attacks (such as prompt injection and attempts to bypass the model or its guardrails) in addition to traditional application security threats.

- Identity and Access Management (IAM) – With the emergence of AI agents, agents will assume their own identities. This theme covers managing these new types of identities and controlling access to models, other agents, and applications.

- Threat Detection and Incident Response – AI threat detection goes beyond traditional SIEM deployments. Incident response plans for AI systems should be prepared and practiced. OWASP and NIST guidelines are a good starting point.

- AI Governance, Responsible AI – Ensuring responsible and ethical use of AI, using approved and authorized models, approving use cases, and ensuring ROI and business outcomes.
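
As a rough illustration of how an organization might start organizing its controls around these themes, the sketch below maps a small control inventory to the eight themes and reports which themes have no controls yet. The control names and the helper functions (`coverage_by_theme`, `uncovered_themes`) are hypothetical and are not taken from any of the frameworks listed above.

```python
# The eight themes from the list above.
THEMES = [
    "IT and Network Infrastructure Security",
    "Securing Data used for AI Systems",
    "Model, Agent and Deployment Security",
    "Communication Interfaces Security",
    "AI Applications Security",
    "Identity and Access Management",
    "Threat Detection and Incident Response",
    "AI Governance, Responsible AI",
]

# Hypothetical control inventory: (control name, theme it supports).
# These entries are illustrative assumptions, not controls quoted
# from SAIF, DASF, NIST, OWASP, or any other framework.
EXAMPLE_CONTROLS = [
    ("Patch management for model-serving hosts", "IT and Network Infrastructure Security"),
    ("Access controls on the vector database", "Securing Data used for AI Systems"),
    ("Rate limiting on inference endpoints", "Model, Agent and Deployment Security"),
    ("Prompt injection filtering", "AI Applications Security"),
    ("Unique identities for AI agents", "Identity and Access Management"),
]


def coverage_by_theme(controls):
    """Group control names under the theme each one supports."""
    coverage = {theme: [] for theme in THEMES}
    for name, theme in controls:
        if theme not in coverage:
            raise ValueError(f"Unknown theme: {theme!r}")
        coverage[theme].append(name)
    return coverage


def uncovered_themes(controls):
    """Return the themes, in list order, that have no controls mapped."""
    return [t for t, names in coverage_by_theme(controls).items() if not names]


if __name__ == "__main__":
    for theme in uncovered_themes(EXAMPLE_CONTROLS):
        print(f"No controls mapped to: {theme}")
```

A gap report like this says nothing about whether the mapped controls are adequate; it only flags themes that have been left out entirely, which is a reasonable first check for end-to-end coverage.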

A neutral framework built on these eight themes is needed.

References

- Removing barriers to American Leadership in Artificial Intelligence

- EU Artificial Intelligence Act

- GenAI Risk Categories