Less sophisticated AI models are good enough for regular use, and they cost less. In many cases, self-hosted open source models could be sufficient. This article draws on research from Epoch AI, a non-profit organization.

Two Main Phases of the AI Model Lifecycle

AI models have two main phases in their lifecycle: Training and Inference.

Training is the “learning” phase, which is completed before a model is made available to the public. Inference is the “use” phase, which happens each time a user prompts the model to get a response.

Why Understand this Distinction?

When a user pays to use an AI model, the cost is usually associated with the “inference” phase of the model’s lifecycle. Note that a single inference request is much faster than training and costs comparatively little.

What is a Token?

A token is a basic unit of text that a Large Language Model (LLM) understands. A token could be a whole word, part of a word, a punctuation mark, or a single letter. When a person sends a prompt to an LLM, the prompt is divided into tokens using a process called tokenization.
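
To make the idea concrete, here is a minimal sketch of tokenization in Python. It is a toy illustration only: the `toy_tokenize` function is a hypothetical name, and it simply splits text into words and punctuation marks. Real LLM tokenizers use learned vocabularies and often split words into smaller pieces, so their token counts will differ.

```python
import re


def toy_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens (illustration only)."""
    # \w+ matches a run of letters or digits; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)


tokens = toy_tokenize("Hello, world! How are you?")
print(tokens)       # ['Hello', ',', 'world', '!', 'How', 'are', 'you', '?']
print(len(tokens))  # 8
```

Even in this simplified version, a short sentence of five words becomes eight tokens, which is why token counts are usually higher than word counts.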

Why are Tokens Important?

- Most AI vendors charge users by the number of tokens processed.

- The capacity of a model’s context window is measured in tokens.

- Different LLMs have different “costs per token” (cheaper vs. expensive models) based upon their capabilities.

- Tokens are different from “parameters”, which are a measure of a model’s size or capacity. Parameters, unlike tokens, are fixed for a model and indicate the size of the internal neural network used by the LLM.

- Complicated tasks usually require more tokens than simpler tasks. Reasoning models require more tokens than older models.
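
The link between tokens and cost can be sketched with a small calculation. This is a rough sketch under stated assumptions: the `estimate_cost` function is hypothetical, it assumes input and output tokens are priced separately per million tokens, and the prices used below are made-up numbers, not any vendor's actual rates.

```python
def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Return the estimated cost in dollars of one request."""
    total = (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    )
    return total / 1_000_000


# Hypothetical prices: $1.00 per million input tokens, $4.00 per million output tokens.
short_answer = estimate_cost(1_000, 500, 1.00, 4.00)
# Same prompt, but the model produces ten times as many output tokens.
long_answer = estimate_cost(1_000, 5_000, 1.00, 4.00)

print(f"short answer: ${short_answer:.4f}")
print(f"long answer:  ${long_answer:.4f}")
```

Under these assumed prices, the request that generates more output tokens costs several times more, even though the prompt is identical. This is the reason token-hungry models can be more expensive per task than their per-token price suggests.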

Inference Costs are Falling

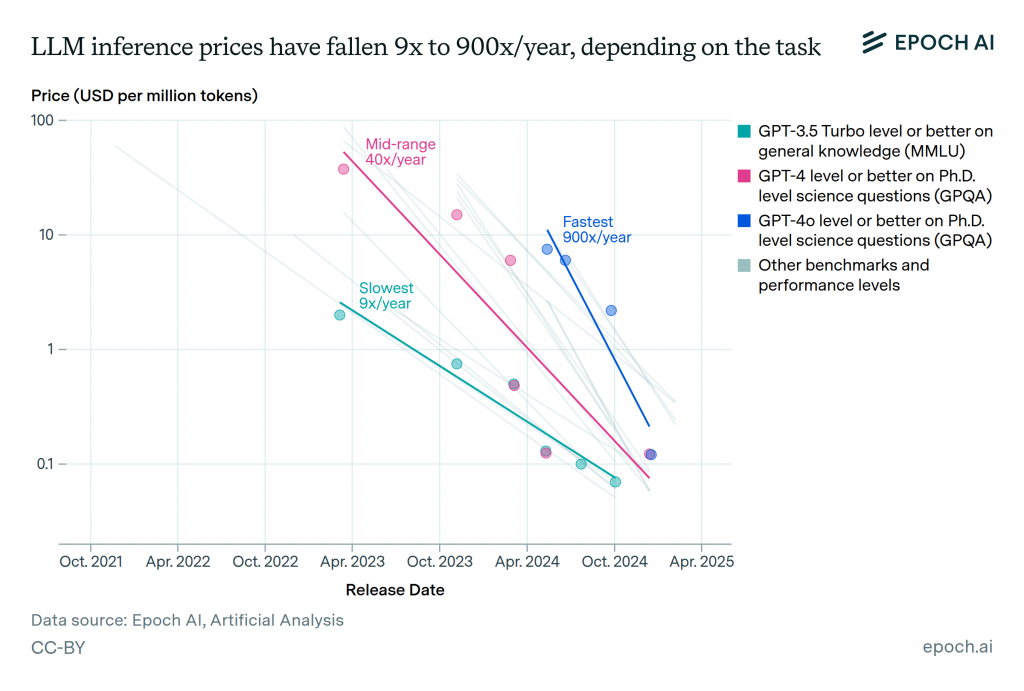

The cost of inference is falling, but it depends heavily on which model you use, even among models from the same vendor. Modern reasoning models consume more tokens than older models. The image above shows pricing trends on a logarithmic scale for different LLMs.

Why Are Less-Smart Models OK in Most Cases?

The majority of common prompts (the average user’s use case) don’t require advanced LLMs with reasoning capabilities. These prompts could be handled by low-cost LLMs, reducing the overall cost.

Recommendations for Picking a Model for AI Applications

When building AI applications, businesses should consider using a combination of models instead of relying on a single option. This helps manage the cost of the application.

- Self-hosted open source models for simpler tasks and where data needs to be kept private.

- Simpler models hosted by third parties for regular tasks.

- Complex reasoning models for complicated tasks.
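
The recommendations above could be sketched as a simple routing rule. This is only an illustrative sketch: the `Tier` enum and `pick_tier` function are hypothetical names, the two boolean flags are a simplification of how an application might classify a request, and giving privacy priority over complexity is an assumption rather than something the recommendations specify.

```python
from enum import Enum


class Tier(Enum):
    SELF_HOSTED = "self-hosted open source model"
    HOSTED_SIMPLE = "third-party hosted simpler model"
    REASONING = "complex reasoning model"


def pick_tier(is_private: bool, is_complex: bool) -> Tier:
    """Choose a model tier for a request (hypothetical routing rule)."""
    # Assumption: data that must stay private is always kept in-house.
    if is_private:
        return Tier.SELF_HOSTED
    # Complicated tasks go to the more expensive reasoning model.
    if is_complex:
        return Tier.REASONING
    # Everything else uses the cheaper hosted option.
    return Tier.HOSTED_SIMPLE


print(pick_tier(is_private=True, is_complex=False).value)
print(pick_tier(is_private=False, is_complex=True).value)
print(pick_tier(is_private=False, is_complex=False).value)
```

In a real application, deciding whether a task is complex is the hard part, and it might be handled by rules, user choice, or a small classifier model.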

---

Image Reference: Ben Cottier et al. (2025), “LLM inference prices have fallen rapidly but unequally across tasks”. Published online at epoch.ai. Retrieved from: ‘https://epoch.ai/data-insights/llm-inference-price-trends’ [online resource]